Introduction

Breast cancer remains one of the most common cancers worldwide, affecting millions of individuals and posing significant challenges to health systems. According to the World Health Organization, it is the most prevalent cancer among women, impacting not only those diagnosed but also their families and communities. The global burden of breast cancer underscores the urgency for more effective diagnostic and treatment strategies.

Despite advances in medical technology, current diagnostic methods for breast cancer have important limitations. Traditional diagnostic tools can produce delayed or inaccurate diagnoses, particularly when distinguishing between subtypes of the disease, which can lead to suboptimal treatment plans and outcomes for patients. There is a clear need for more precise, more reliable, and earlier detection techniques.

Machine learning is one promising response to this need. It can analyze large datasets and identify patterns that might elude human experts. In the context of breast cancer, machine learning models are being trained on large volumes of medical data, including genetic information, to predict disease presence, progression, and response to treatment. This capability can improve diagnostic accuracy and supports personalized medicine approaches that aim to improve patient outcomes.

Integrating machine learning into medical diagnostics could substantially change how breast cancer is diagnosed and treated.

Basics of Machine Learning in Medicine

Understanding Machine Learning

Machine learning (ML) is a branch of artificial intelligence that focuses on building systems that learn from data, rather than being explicitly programmed to perform a specific task. Imagine teaching a computer to recognize patterns the way humans do, but at a scale and speed that far exceed human capabilities. At its core, machine learning involves feeding a computer algorithm an enormous amount of data and allowing it to learn and make informed decisions based on that data. In the context of medicine, this means a machine learning model can learn to detect diseases from various inputs such as lab results, images, or genetic information.

Training Models

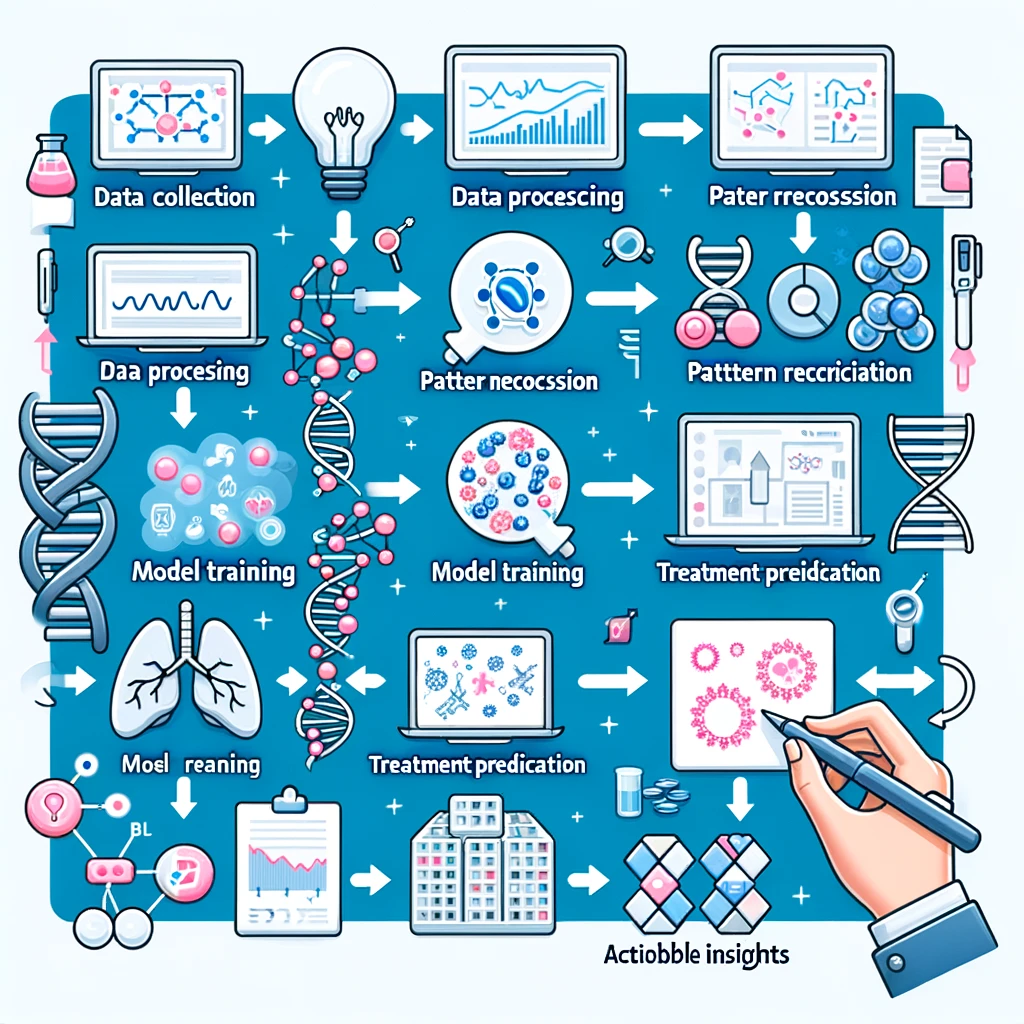

The power of machine learning in medicine is realized through the training of models using historical medical data. This process involves several steps:

- Data Collection: Gathering comprehensive historical health data, which could include diagnoses, imaging, lab results, and patient outcomes.

- Data Preparation: Cleaning and organizing this data to ensure it is accurate and uniform. This often involves removing errors, filling in missing values, and standardizing data formats.

- Model Training: Feeding the prepared data into a machine learning algorithm. The model learns by finding patterns and relationships in the data, such as identifying the characteristics that distinguish benign from malignant tumors.

- Model Testing: Evaluating the model’s accuracy using new, unseen data. This is crucial to ensure the model can generalize its predictions to real-world situations, not just to the data on which it was trained.

Through this process, machine learning models can predict outcomes, such as whether a tumor is likely to be cancerous, or diagnose a disease from symptoms and test results. The goal is to use these predictions to support doctors and healthcare providers in making faster, more accurate decisions.
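
The four steps above can be sketched in a few lines of plain Python. This is a minimal illustration under stated assumptions, not a clinical pipeline: the records are synthetic, the two features (tumor size and a texture score) and all function names are hypothetical, and a simple nearest-centroid rule stands in for whatever algorithm a real system would use.

```python
import random
import statistics

def collect(n, seed=0):
    """Data collection stand-in: synthetic ([size_mm, texture], label) rows."""
    rng = random.Random(seed)
    rows = []
    for _ in range(n):
        y = 1 if rng.random() < 0.5 else 0           # 1 = malignant, 0 = benign
        size = rng.gauss(25.0 if y else 12.0, 4.0)
        texture = rng.gauss(0.7 if y else 0.3, 0.1)
        if rng.random() < 0.05:                      # about 5% missing sizes
            size = None
        rows.append(([size, texture], y))
    return rows

def fit_preparation(train):
    """Learn each feature's mean and standard deviation from training rows only."""
    stats = []
    for j in range(2):                               # two features per row
        seen = [x[j] for x, _ in train if x[j] is not None]
        stats.append((statistics.mean(seen), statistics.pstdev(seen)))
    return stats

def prepare(rows, stats):
    """Data preparation: fill missing values with the training mean, then standardize."""
    out = []
    for x, y in rows:
        z = [((v if v is not None else m) - m) / s for v, (m, s) in zip(x, stats)]
        out.append((z, y))
    return out

def train_centroids(train):
    """Model training: compute one mean feature vector (centroid) per class."""
    centroids = {}
    for label in (0, 1):
        points = [x for x, y in train if y == label]
        centroids[label] = [statistics.mean(col) for col in zip(*points)]
    return centroids

def predict(centroids, x):
    """Assign the class whose centroid is closest in squared distance."""
    def dist(c):
        return sum((a - b) ** 2 for a, b in zip(x, c))
    return min(centroids, key=lambda label: dist(centroids[label]))

rows = collect(400)
random.Random(1).shuffle(rows)
train_raw, test_raw = rows[:300], rows[300:]         # hold out 25% for testing
stats = fit_preparation(train_raw)
train, test = prepare(train_raw, stats), prepare(test_raw, stats)
model = train_centroids(train)

# Model testing: accuracy on rows the model never saw during training.
accuracy = sum(predict(model, x) == y for x, y in test) / len(test)
print(f"held-out accuracy: {accuracy:.2f}")
```

The fill-in value and the scaling statistics are learned from the training rows only, so nothing about the test rows leaks into training.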

Model Training

Model training is a crucial step in the machine learning process where the algorithm learns from data to make future predictions or decisions. Here’s a step-by-step breakdown of how this happens, specifically in the context of medical diagnostics for distinguishing tumor types:

- Feeding Prepared Data: The prepared dataset, which has been cleaned and formatted, is fed into a machine learning algorithm. This data typically includes a variety of features such as patient demographics, imaging data, genetic information, and known outcomes (e.g., benign or malignant).

- Learning from Data: The machine learning model processes this data through its algorithm. For instance, in a supervised learning model, the algorithm is trained on a labeled dataset, where each example is tagged with the correct answer (benign or malignant). The model uses these examples to learn the underlying patterns associated with each outcome.

- Pattern Recognition: As the model sifts through the data, it begins to recognize patterns and relationships. For example, it might identify specific genetic markers or imaging characteristics that are prevalent in malignant tumors but not in benign ones. This pattern recognition is facilitated by the mathematical and statistical methods underlying the machine learning model, such as regression analysis, decision trees, or neural networks.

- Iterative Improvement: The training process is iterative, meaning the model repeatedly goes through the dataset, adjusting its internal parameters to better align its predictions with the real outcomes. Each pass through the data is an opportunity to learn more and refine its predictions.

- Validation of Learning: Throughout the training process, the model’s accuracy is repeatedly assessed on a separate set of data that it is not trained on, known as validation data. This helps to ensure that the model isn’t just memorizing the data it has seen but is actually learning to generalize from it. Validation data is distinct from the final test set, which is held back until training is complete.

By the end of the training process, the machine learning model aims to have learned enough to accurately predict whether new, unseen examples of tumors are benign or malignant based on the patterns it recognized during training. This capability can significantly enhance diagnostic accuracy and speed, leading to better patient outcomes.
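
To make the iterative part concrete, here is a small sketch of logistic regression trained by gradient descent, one of the simplest models that fits the description above. The data is synthetic and the two features are placeholders; the learning rate, the number of passes, and the helper names are illustrative choices, not recommendations.

```python
import math
import random

def make_data(n, seed):
    """Synthetic two-feature examples; label 1 = malignant, 0 = benign."""
    rng = random.Random(seed)
    data = []
    for _ in range(n):
        y = rng.randint(0, 1)
        shift = 1.0 if y else -1.0
        data.append(([rng.gauss(shift, 1.0), rng.gauss(0.5 * shift, 1.0)], y))
    return data

def predict_proba(w, b, x):
    """Predicted probability of the positive class (logistic function)."""
    z = sum(wi * xi for wi, xi in zip(w, x)) + b
    return 1.0 / (1.0 + math.exp(-z))

def log_loss(w, b, data):
    """Average penalty for disagreeing with the known labels."""
    eps = 1e-12
    total = 0.0
    for x, y in data:
        p = min(max(predict_proba(w, b, x), eps), 1 - eps)
        total -= y * math.log(p) + (1 - y) * math.log(1 - p)
    return total / len(data)

def accuracy(w, b, data):
    """Share of examples whose thresholded prediction matches the label."""
    return sum((predict_proba(w, b, x) >= 0.5) == bool(y) for x, y in data) / len(data)

train, val = make_data(300, seed=0), make_data(100, seed=1)
w, b, lr = [0.0, 0.0], 0.0, 0.1
history = []
for epoch in range(50):
    # One pass over the training data: accumulate the gradient of the log loss.
    grad_w, grad_b = [0.0, 0.0], 0.0
    for x, y in train:
        err = predict_proba(w, b, x) - y
        grad_w = [g + err * xi for g, xi in zip(grad_w, x)]
        grad_b += err
    # Adjust the internal parameters a small step against the gradient.
    w = [wi - lr * g / len(train) for wi, g in zip(w, grad_w)]
    b -= lr * grad_b / len(train)
    # Track training loss and accuracy on data the model is not trained on.
    history.append((log_loss(w, b, train), accuracy(w, b, val)))

print(f"training loss {history[0][0]:.3f} -> {history[-1][0]:.3f}")
print(f"validation accuracy after training: {history[-1][1]:.2f}")
```

If the validation accuracy stalls or falls while the training loss keeps dropping, that is the usual sign that the model has started to memorize rather than generalize.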

Feeding Prepared Data

Feeding prepared data into a machine learning model is a pivotal step in the training process. This step involves inputting a well-curated and formatted dataset into the algorithm so that it can learn effectively. Here’s a detailed look at what this entails:

- Dataset Composition: The dataset used for training a machine learning model in medical diagnostics usually comprises various types of data that provide comprehensive insights into patient health. For cancer diagnostics, specifically, this might include:

- Patient Demographics: Information such as age, sex, and ethnicity, which can influence the likelihood of disease and its presentation.

- Imaging Data: Radiological images like mammograms, MRIs, or CT scans that visually depict the physical characteristics of tumors.

- Genetic Information: Data on specific genes or broader genomic patterns that have been associated with cancer risks or types. For example, mutations in the BRCA1 and BRCA2 genes are linked with higher risks of developing breast cancer.

- Known Outcomes: Each data point includes a label indicating whether the tumor was benign or malignant, as determined by prior medical diagnosis. This is critical for supervised learning models, where the algorithm learns to associate data features with specific outcomes.

- Data Preparation Quality: Before being fed into the model, the data must be meticulously prepared to ensure it is of high quality, which includes:

- Cleaning: Removing or correcting any inaccuracies or inconsistencies in the data, such as missing values or outliers that could mislead the model.

- Formatting: Structuring the data in a way that is compatible with the machine learning algorithm. This might involve normalizing numerical values or encoding categorical data into a format that the algorithm can process.

- Input Process: The prepared data is then fed into the machine learning algorithm, typically in batches or subsets that the model processes sequentially. Batching helps manage computational resources and allows for iterative adjustments as the model learns.

- Feature Selection: Not all features are equally informative for making predictions. Feature selection techniques can be used to identify and retain the features that contribute most to the model’s prediction accuracy.

By feeding the model with high-quality, well-prepared data, you enhance its ability to learn effectively and make accurate predictions. This stage is crucial because the quality and format of the data directly impact the model’s performance and its subsequent utility in clinical settings.
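
The formatting and input steps described above can be illustrated with a short sketch. The records, column choices, and function names below are hypothetical; the point is only to show what normalizing a numerical value, encoding a categorical value, and batching look like in practice.

```python
def min_max_scale(column):
    """Rescale a numeric column to the range [0, 1]."""
    lo, hi = min(column), max(column)
    if hi == lo:
        return [0.0 for _ in column]     # a constant column carries no information
    return [(v - lo) / (hi - lo) for v in column]

def one_hot(values):
    """Encode categories as 0/1 indicator vectors, one slot per category."""
    categories = sorted(set(values))
    encoded = [[1 if v == c else 0 for c in categories] for v in values]
    return encoded, categories

def batches(rows, batch_size):
    """Yield consecutive slices of at most batch_size rows."""
    for start in range(0, len(rows), batch_size):
        yield rows[start:start + batch_size]

# Hypothetical records: tumor size in mm and the imaging modality used.
sizes = [8.0, 14.0, 20.0, 32.0, 11.0]
modalities = ["mammogram", "MRI", "mammogram", "CT", "MRI"]

scaled = min_max_scale(sizes)
encoded, categories = one_hot(modalities)
rows = [[s] + e for s, e in zip(scaled, encoded)]

for batch in batches(rows, batch_size=2):
    print(batch)
```

In a real project the scaling range and the category list would be learned from the training data and then reused unchanged for validation and test data.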

Patient Demographics in Machine Learning Models

Patient demographics are crucial variables that significantly influence machine learning models in medical diagnostics, especially in fields like oncology. Here’s a detailed look at how demographics such as age, sex, and ethnicity are used and why they are important:

- Age: Age is a critical factor in many diseases, including cancer. For instance, the risk of developing breast cancer increases with age. By including age as a feature in a machine learning model, the algorithm can adjust its predictions based on age-related risk factors and prevalence. For example, certain types of tumors might be more common or aggressive in older patients, influencing diagnosis and treatment options.

- Sex: Many diseases manifest differently in males and females due to biological and genetic differences. Breast cancer occurs predominantly in females, but males can also develop it, albeit far less frequently. A machine learning model that includes sex as a variable can better tailor its diagnostic predictions and recognize patterns specific to each sex.

- Ethnicity: Ethnic background can influence the prevalence and outcomes of diseases due to genetic, environmental, and socio-economic factors. For example, certain genetic mutations linked to breast cancer, like those in the BRCA1 and BRCA2 genes, are found with varying frequencies across different ethnic groups. Including ethnicity in a machine learning model helps to account for these variations and improve the accuracy and applicability of its predictions across diverse populations.

Utilizing Demographics in Model Training and Predictions

Incorporating patient demographics into a machine learning model involves:

- Data Collection and Input: Gathering comprehensive demographic information as part of the patient data collected for model training. This data needs to be accurately recorded and consistently formatted to be useful in training.

- Feature Engineering: Transforming raw demographic data into formats that can be effectively used by machine learning algorithms. This might involve encoding categorical data (like ethnicity) into numerical values or creating age groups to better capture age-related trends.

- Model Adjustment: Algorithms may adjust their predictions based on demographic features. For instance, a model might learn from training data that younger women with a specific tumor type have a better prognosis, and weight its prognostic predictions accordingly.
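
As a rough sketch of the feature engineering step, the snippet below groups ages into bands and encodes a categorical demographic field as indicator columns. The age cut points, the placeholder category names (`group_a` and so on), and the helper names are all assumptions made for illustration.

```python
from bisect import bisect_right

AGE_EDGES = [40, 50, 60, 70]                      # hypothetical cut points, in years
AGE_LABELS = ["<40", "40-49", "50-59", "60-69", "70+"]

def age_group(age):
    """Return the label of the age band that contains `age`."""
    return AGE_LABELS[bisect_right(AGE_EDGES, age)]

def fit_vocabulary(values):
    """Map each category seen in the training data to a fixed column index."""
    return {c: i for i, c in enumerate(sorted(set(values)))}

def encode(value, vocab):
    """One-hot encode a value; categories unseen in training map to all zeros."""
    vec = [0] * len(vocab)
    if value in vocab:
        vec[vocab[value]] = 1
    return vec

# Hypothetical patient records with placeholder category names.
patients = [
    {"age": 37, "group": "group_a"},
    {"age": 52, "group": "group_b"},
    {"age": 70, "group": "group_c"},
]

age_vocab = {label: i for i, label in enumerate(AGE_LABELS)}
group_vocab = fit_vocabulary(p["group"] for p in patients)
features = [
    encode(age_group(p["age"]), age_vocab) + encode(p["group"], group_vocab)
    for p in patients
]
for row in features:
    print(row)
```

Fixing the vocabulary at training time keeps the columns in the same order later on, and an unseen category produces an all-zero vector instead of an error.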

Implications of Including Demographics

Including patient demographics in machine learning models has several implications:

- Improved Personalization: By accounting for patient-specific factors like age, sex, and ethnicity, machine learning models can provide more personalized and accurate diagnostics.

- Enhanced Predictive Accuracy: Demographics can help models distinguish between conditions that present with similar symptoms but carry different risks in different demographic groups.

- Ethical Considerations: Care must be taken to ensure that the use of demographics does not reinforce biases or lead to disparities in healthcare outcomes. Models must be regularly evaluated and validated across diverse demographic groups to ensure equity in healthcare diagnostics.

In summary, patient demographics are not just additional data points but are integral to the nuanced understanding and prediction of diseases in machine learning applications in medicine. They enable models to make more informed and tailored predictions, ultimately leading to better patient care and outcomes.
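
The per-group evaluation recommended under Ethical Considerations can be as simple as computing the same metric separately for each group and comparing the results. The sketch below does this for sensitivity (the share of malignant cases the model catches); the records and group names are made up for illustration.

```python
from collections import defaultdict

def sensitivity_by_group(records):
    """records: (group, true_label, predicted_label) tuples, where 1 = malignant."""
    caught = defaultdict(int)
    positives = defaultdict(int)
    for group, y_true, y_pred in records:
        if y_true == 1:
            positives[group] += 1
            caught[group] += int(y_pred == 1)
    # Groups with no malignant cases are left out rather than reported as zero.
    return {g: caught[g] / positives[g] for g in positives}

# Hypothetical predictions for two demographic groups.
records = [
    ("group_a", 1, 1), ("group_a", 1, 1), ("group_a", 1, 1), ("group_a", 1, 0),
    ("group_a", 0, 0), ("group_a", 0, 1),
    ("group_b", 1, 1), ("group_b", 1, 1), ("group_b", 1, 0), ("group_b", 1, 0),
    ("group_b", 0, 0), ("group_b", 0, 0),
]

per_group = sensitivity_by_group(records)
gap = max(per_group.values()) - min(per_group.values())
print(per_group, f"gap = {gap:.2f}")
```

A large gap between groups is a signal to look more closely at how each group is represented in the training data before the model is used in practice.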

Labeled Dataset

In supervised learning, a labeled dataset is crucial because it supplies the examples from which the model learns what to predict. This dataset is composed of numerous records, each featuring:

- Input Features: These are the variables or attributes that describe each instance in the dataset. In the context of tumor classification, these features could include:

- Patient Age: Often correlates with different cancer risks and types.

- Imaging Data: Images from mammograms, MRIs, or CT scans that show physical characteristics of the tumor.

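- Biopsy Results: Microscopic examination results that provide definitive evidence of the presence or absence of cancer cells. When the benign or malignant label is itself derived from the biopsy, these results must be kept out of the input features, otherwise the model is simply handed the answer.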

- Genetic Markers: Information on genetic variations that may influence the likelihood of developing malignant tumors.

- Clinical History: Previous medical history that might affect cancer risk or diagnosis.

- Labels: Each record in the dataset also includes a label, which is the outcome the model needs to predict. For tumor classification, the labels would typically be ‘benign’ or ‘malignant’. These labels are considered the “ground truth”, used during the training phase to guide the model’s learning process.

Function of the Labeled Dataset

- Training the Model: The primary use of a labeled dataset is to train the machine learning model. By exposing the model to a wide array of examples, each paired with the correct answer, the model learns to associate specific patterns and characteristics in the input features with the corresponding outcome.

- Supervised Learning Process: During training, the algorithm adjusts its parameters to minimize the difference between its predictions and the actual labels. This process involves error minimization techniques, where the model learns to correct its mistakes based on the feedback received from comparing its predictions against the true labels.

- Assessing Model Performance: The labeled dataset can also be split into a training set and a testing set. The model is trained on the training set, while the testing set is used to evaluate how well the model performs on data it has not seen before. This helps in understanding the model’s ability to generalize beyond the training data.
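
The "difference between predictions and labels" mentioned above is usually made precise with a loss function. One common choice for two-class problems such as benign versus malignant, given here as an illustration rather than as the method any particular system uses, is the binary cross-entropy (log loss). In the notation introduced for this example, $N$ is the number of training records, $y_i \in \{0, 1\}$ is the label of record $i$ (1 for malignant), and $\hat{p}_i$ is the model's predicted probability that record $i$ is malignant:

$$
\mathcal{L} = -\frac{1}{N} \sum_{i=1}^{N} \left[ y_i \log \hat{p}_i + (1 - y_i) \log\left(1 - \hat{p}_i\right) \right]
$$

The loss is close to zero when confident predictions match the labels and grows sharply when the model is confidently wrong, which is the feedback training uses to adjust the parameters.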

Importance in Medical Diagnostics

In medical diagnostics, the accuracy of the labeled data is paramount because any error in the labels can lead to incorrect learning, thereby affecting the model’s predictions. Ensuring high-quality, accurately labeled data is thus critical for developing reliable and effective diagnostic tools. This data not only trains the model to recognize the presence of tumors but also aids in distinguishing between benign and malignant cases, which is crucial for determining the appropriate treatment paths.

Imaging Data in Machine Learning

- Types of Imaging Data:

- Mammograms: These are X-ray images of the breast that are commonly used for routine screening of breast cancer. They can show tumors before they can be felt and can also display microcalcifications (tiny deposits of calcium in the breast) that sometimes indicate the presence of breast cancer.

- Magnetic Resonance Imaging (MRI): MRI uses magnetic fields and radio waves to produce detailed images of the inside of the body. In cancer diagnostics, MRIs are particularly useful for examining soft tissues and determining the extent of tumor involvement without exposure to ionizing radiation.

- Computed Tomography (CT) Scans: CT scans use X-rays to create detailed cross-sectional images of the body. They can be essential in examining the size and location of tumors in the body and checking if cancer has spread to other areas.

- Features Extracted from Imaging Data:

- Tumor Size and Shape: Machine learning models can analyze images to determine tumor size, shape, and volume, which are critical factors in many diagnostic and treatment decisions.

- Texture Analysis: This involves analyzing the texture of the tumor and surrounding tissues. Textural features can provide information about the heterogeneity of the tumor, which can be indicative of its malignancy.

- Tumor Margin: The characteristics of the tumor’s edges—whether they are well-defined, irregular, or spiculated—can be significant indicators of malignancy and are used by models to differentiate between benign and malignant tumors.

- Role in Model Training:

- Training Data: Imaging data forms a part of the training dataset where each image is labeled with diagnostic information (e.g., benign or malignant). This allows the machine learning model to learn the visual patterns associated with different types of tumors.

- Feature Engineering: Before being fed into a machine learning model, images are often processed through feature engineering to highlight important aspects, such as edges, contrasts, or specific areas. This processing makes it easier for the model to learn from the visual data.

- Data Augmentation: To increase the robustness of the model and prevent overfitting, imaging data is often augmented. Techniques such as rotation, zoom, and flip are used to create varied data scenarios from a single image, enhancing the model’s ability to generalize across new, unseen images.

- Challenges with Imaging Data:

- High Dimensionality: Images are high-dimensional data. A single scan can contain millions of pixel or voxel values, so processing imaging datasets requires significant computational resources.

- Standardization: Variability in how images are taken (different machines, settings, etc.) can affect the consistency of the data, requiring rigorous preprocessing to standardize images for effective model training.

Imaging data, with its rich, detailed representation of physical characteristics, provides invaluable insights for machine learning models in medical diagnostics. By effectively leveraging techniques to extract, process, and learn from imaging data, these models offer the potential to significantly enhance the accuracy and effectiveness of cancer diagnostics, leading to better patient outcomes.
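
The data augmentation step mentioned above can be sketched without any imaging library by treating an image as a grid of pixel values. The tiny grid and function names below are placeholders; real pipelines work on full-resolution scans and typically add zoom and small-angle rotations, which are omitted here.

```python
def flip_horizontal(image):
    """Mirror the image left to right."""
    return [row[::-1] for row in image]

def flip_vertical(image):
    """Mirror the image top to bottom."""
    return [row[:] for row in image[::-1]]

def rotate_90_clockwise(image):
    """Rotate the image a quarter turn clockwise."""
    return [list(col) for col in zip(*image[::-1])]

def augment(image, label):
    """Return the original plus three variants, all sharing the original label."""
    variants = [
        image,
        flip_horizontal(image),
        flip_vertical(image),
        rotate_90_clockwise(image),
    ]
    return [(variant, label) for variant in variants]

# A 2 x 3 stand-in for a grayscale image.
image = [[0, 1, 2],
         [3, 4, 5]]

for variant, label in augment(image, "malignant"):
    print(label, variant)
```

Each augmented copy keeps the label of the image it came from, so the transformations should be ones that do not change the diagnosis.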

Tumor Size, Shape, and Volume Analysis

- Importance of Tumor Characteristics:

- Tumor Size: The size of a tumor is a vital parameter that often influences the stage of the cancer and the treatment approach. Larger tumors might suggest a more advanced disease or a lower likelihood of successful removal with surgery.

- Tumor Shape: The shape of a tumor can indicate its growth pattern and aggressiveness. Irregular or lobulated shapes might be associated with more aggressive types of cancer.

- Tumor Volume: Volume provides a three-dimensional assessment of the tumor, offering a more comprehensive view than size alone. This metric can be crucial for planning treatments like radiation therapy, where the volume of tissue that needs to be targeted is critical.

- Machine Learning in Imaging Analysis:

- Image Segmentation: Machine learning models, particularly those using convolutional neural networks (CNNs), are adept at segmenting medical images. This process involves dividing the image to isolate the tumor from normal tissues, allowing for precise measurements of its size, shape, and volume.

- Feature Extraction: After segmentation, the models extract features related to the shape and size of the tumor. This might involve calculating dimensions, perimeter, area, and other geometric properties that define the tumor’s morphology.

- Quantitative Analysis: Beyond visual assessment, machine learning enables quantitative analysis of tumor characteristics. This approach allows for the objective and reproducible measurement of tumor dimensions, which are critical for monitoring disease progression or response to treatment.

- Applications in Clinical Practice:

- Diagnostic Accuracy: By providing detailed measurements of tumor size, shape, and volume, machine learning models aid clinicians in making more accurate diagnoses and staging cancer more effectively.

- Treatment Planning: Accurate tumor measurements are crucial for planning surgical interventions, radiation therapy, and assessing eligibility for certain treatment protocols. For example, knowing the precise volume of a tumor can help in calculating the dosage of radiation needed or the feasibility of complete surgical resection.

- Prognostic Value: Changes in the size, shape, and volume of tumors over time can provide important prognostic information. Machine learning models can automatically detect and quantify these changes, offering valuable insights into the disease’s progression or regression.

- Challenges and Advancements:

- Standardization: Variability in imaging techniques and equipment can affect the accuracy of tumor measurements. Advances in machine learning are helping to mitigate these issues by standardizing the extraction of features across different imaging modalities.

- Interpretation and Integration: Integrating machine learning-derived measurements into clinical workflows involves challenges, including ensuring that such tools are interpretable and actionable by healthcare professionals.

The ability of machine learning models to analyze and quantify tumor size, shape, and volume from medical images represents a transformative development in oncology. This technology not only enhances diagnostic precision but also significantly impacts treatment planning and prognosis, ultimately leading to improved patient care outcomes.
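
Once a segmentation model has produced a mask marking which pixels belong to the tumor, the geometric measurements described above reduce to simple counting. The sketch below assumes a 2D binary mask and a known pixel spacing; the mask, the spacing value, and the function name are hypothetical, and a real volume calculation would repeat this across image slices.

```python
def measure_mask(mask, pixel_mm=1.0):
    """Measure a binary segmentation mask (1 = tumor pixel, 0 = background)."""
    rows, cols = len(mask), len(mask[0])
    pixels = [(r, c) for r in range(rows) for c in range(cols) if mask[r][c] == 1]
    if not pixels:
        return {"area_mm2": 0.0, "perimeter_mm": 0.0, "height_mm": 0.0, "width_mm": 0.0}

    def is_tumor(r, c):
        return 0 <= r < rows and 0 <= c < cols and mask[r][c] == 1

    # Perimeter: count pixel edges that border background or the image boundary.
    exposed_edges = sum(
        not is_tumor(r + dr, c + dc)
        for r, c in pixels
        for dr, dc in ((1, 0), (-1, 0), (0, 1), (0, -1))
    )
    rs = [r for r, _ in pixels]
    cs = [c for _, c in pixels]
    return {
        "area_mm2": len(pixels) * pixel_mm ** 2,
        "perimeter_mm": exposed_edges * pixel_mm,
        "height_mm": (max(rs) - min(rs) + 1) * pixel_mm,   # bounding-box height
        "width_mm": (max(cs) - min(cs) + 1) * pixel_mm,    # bounding-box width
    }

# Hypothetical mask: seven tumor pixels, each 0.5 mm on a side.
mask = [
    [0, 0, 0, 0, 0],
    [0, 1, 1, 1, 0],
    [0, 1, 1, 1, 0],
    [0, 0, 1, 0, 0],
]
result = measure_mask(mask, pixel_mm=0.5)
print(result)
```

Because the same counting rule is applied to every scan, measurements taken at different times can be compared directly, which is what makes this kind of analysis reproducible.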

Texture Analysis in Medical Imaging

- Understanding Texture Analysis:

- Definition: Texture analysis refers to the process of quantifying the variations in surface characteristics and patterns within an image. In medical imaging, this involves assessing the grayscale and structural variations in images that represent different tissue types.

- Importance: Textural features like smoothness, roughness, regularity, or irregularity can help differentiate between benign and malignant tumors. Heterogeneous texture is often associated with more aggressive malignancy.

- Methods of Texture Analysis:

- Statistical Methods: These involve calculating statistical parameters from the pixel intensity distributions within an image. Common techniques include the gray-level co-occurrence matrix (GLCM), which measures how often pairs of pixels with specific values occur in a specified spatial relationship, and the gray-level run length matrix (GLRLM), which analyzes the lengths of contiguous runs of pixels that share the same gray level.

- Model-Based Methods: These involve fitting models to the image data to extract texture features. One example is fractal analysis, which estimates a texture’s fractal dimension as a measure of its complexity.

- Transform-Based Methods: Techniques such as Fourier or wavelet transforms are used to analyze the frequency components of the image. These methods can isolate patterns within the tumor that are not easily visible in the spatial domain.

- Role in Tumor Assessment:

- Heterogeneity Detection: Texture analysis can reveal the heterogeneity within a tumor, which is a key indicator of malignancy. More heterogeneous tumors, which display a high degree of variation in texture, are often associated with higher grades of cancer.

- Response to Treatment: Textural analysis can also be used to monitor changes in the tumor’s texture over time, offering insights into how well a tumor is responding to treatment. For instance, a decrease in heterogeneity might suggest that a tumor is responding well to chemotherapy.

- Challenges in Texture Analysis:

- High Dimensionality: Texture analysis can produce a very large number of features, making it challenging to identify which ones are most relevant for diagnosis or prognosis.

- Interpretation: While texture analysis can provide detailed data about tumor characteristics, interpreting these features in a clinically meaningful way requires substantial expertise.

- Integration into Clinical Workflows:

- Diagnostic Protocols: Incorporating texture analysis into standardized diagnostic protocols can help refine the assessment of tumor malignancy and guide treatment decisions.

- Prognostic Tools: Textural features are increasingly being considered as potential prognostic markers, helping predict disease outcomes and tailor personalized treatment plans.

Texture analysis is a sophisticated aspect of machine learning applied to medical imaging that enhances our understanding of tumors. By analyzing the texture of tumors and surrounding tissues, clinicians can gain deeper insights into the behavior and potential aggressiveness of cancers, aiding in more accurate diagnoses and personalized treatment strategies.
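
To show what a statistical texture feature looks like in practice, the sketch below builds a gray-level co-occurrence matrix for horizontally adjacent pixels and derives two common summaries from it: contrast and homogeneity. The two 4 x 4 patches are toy examples with four gray levels, and the function names are chosen for this illustration; established imaging libraries provide more complete implementations.

```python
def glcm(image, levels, dr=0, dc=1):
    """Count how often gray level i has gray level j at offset (dr, dc)."""
    matrix = [[0] * levels for _ in range(levels)]
    rows, cols = len(image), len(image[0])
    for r in range(rows):
        for c in range(cols):
            r2, c2 = r + dr, c + dc
            if 0 <= r2 < rows and 0 <= c2 < cols:
                matrix[image[r][c]][image[r2][c2]] += 1
    return matrix

def normalize(matrix):
    """Turn counts into probabilities that sum to 1."""
    total = sum(map(sum, matrix))
    return [[v / total for v in row] for row in matrix]

def contrast(p):
    """Weight each pair by its squared gray-level difference."""
    n = len(p)
    return sum(p[i][j] * (i - j) ** 2 for i in range(n) for j in range(n))

def homogeneity(p):
    """Reward pairs with similar gray levels; equals 1 for a uniform patch."""
    n = len(p)
    return sum(p[i][j] / (1 + (i - j) ** 2) for i in range(n) for j in range(n))

smooth = [[1] * 4 for _ in range(4)]        # uniform patch
mixed = [[0, 3, 0, 3],
         [3, 0, 3, 0],
         [0, 3, 0, 3],
         [3, 0, 3, 0]]                      # checkerboard-like patch

p_smooth = normalize(glcm(smooth, levels=4))
p_mixed = normalize(glcm(mixed, levels=4))
print("smooth:", contrast(p_smooth), homogeneity(p_smooth))
print("mixed: ", contrast(p_mixed), homogeneity(p_mixed))
```

The uniform patch has a contrast of 0, while the checkerboard patch, where every neighboring pair differs by three gray levels, has a contrast of 9 and much lower homogeneity. Numbers like these are what a model receives as a description of heterogeneity.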

Genetic Information in Cancer Diagnostics

- Role of Genetic Information:

- Risk Assessment: Certain genetic mutations are known to increase the risk of developing specific types of cancer. For example, mutations in the BRCA1 and BRCA2 genes are well documented to raise the risk of breast and ovarian cancers significantly. Identifying individuals with these mutations allows for early monitoring and intervention.

- Cancer Typing: Different genetic mutations can influence the type and behavior of a cancer. For instance, mutations in the EGFR gene are prevalent in non-small cell lung cancer and have implications for therapy choices.

- Treatment Decisions: Genetic information guides the selection of targeted therapies that are more likely to be effective based on the genetic profile of a tumor. For example, patients with specific mutations might respond better to certain drugs due to the molecular pathways affected by those mutations.

- Machine Learning and Genetic Data:

- Pattern Recognition: Machine learning models are particularly adept at identifying patterns in large and complex datasets, including genomic data. These models can recognize which genetic mutations are most associated with certain cancer types or outcomes.

- Predictive Modeling: Using genetic data, machine learning algorithms can predict disease progression and response to treatments. This predictive capability is crucial for developing personalized treatment plans that are optimized for the genetic profile of individual patients.

- Integrative Analysis: Often, genetic data is combined with other types of data, such as clinical information, imaging, and laboratory tests, to create comprehensive models that predict outcomes more accurately. Machine learning facilitates the integration of these diverse data types to provide a holistic view of a patient’s condition.

- Challenges in Utilizing Genetic Information:

- Data Complexity: Genetic data is highly complex, involving large volumes of information that can be challenging to analyze and interpret. Machine learning models must be designed to handle the high dimensionality and variability of genetic data.

- Ethical Considerations: The use of genetic information in medicine raises significant ethical concerns, including issues of privacy, consent, and potential discrimination. Proper guidelines and regulations are crucial to ensure ethical handling of genetic data.

- Future Directions:

- Increased Precision: Ongoing advances in machine learning are expected to enhance the precision of genetic analyses in cancer care, leading to more accurate predictions and better targeted therapies.

- Broader Applications: As genetic sequencing becomes more accessible and affordable, its integration into routine cancer care through machine learning models is likely to expand, benefiting a wider range of patients.

Genetic information is a cornerstone of personalized medicine in oncology, offering profound insights into the risk, type, and treatment of cancer. Machine learning models enhance the utility of genetic data by enabling sophisticated analyses that can guide clinical decisions and improve patient outcomes. As technology advances, the integration of genetic insights into cancer care will continue to evolve, promising even more personalized and effective treatments for patients.

Machine Learning and Genetic Data

The application of machine learning in analyzing genetic data has been transformative in oncology, offering new insights into cancer diagnosis, prognosis, and personalized treatment. Here’s how machine learning enhances the utilization of genetic data in medical contexts:

Pattern Recognition

- Identifying Genetic Markers: Machine learning models excel in sifting through vast amounts of genetic information to identify specific mutations associated with increased cancer risk, prognosis, or treatment responses. For example, identifying mutations in the BRCA genes in breast cancer patients helps in assessing risk and planning preventive measures.

- Association with Cancer Types: These models can recognize complex patterns linking certain genetic profiles to specific types of cancer. This capability is crucial for categorizing cancers more precisely beyond traditional histological methods, enabling more targeted therapies.

- Outcome Correlation: Machine learning can analyze how particular genetic variations correlate with patient outcomes, such as survival rates, recurrence, and response to different treatments, enhancing the predictive accuracy of clinical assessments.
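
A very simple version of this kind of association analysis is to compare how often a poor outcome occurs among carriers and non-carriers of a marker. The sketch below computes an odds ratio for each marker from made-up records; the marker names are placeholders, and the 0.5 added to each count is the Haldane-Anscombe correction, used here to avoid division by zero in small samples.

```python
def odds_ratio(records, marker):
    """Odds of a poor outcome for carriers of `marker` relative to non-carriers."""
    carrier_poor = carrier_good = other_poor = other_good = 0
    for markers, poor_outcome in records:
        if marker in markers:
            if poor_outcome:
                carrier_poor += 1
            else:
                carrier_good += 1
        elif poor_outcome:
            other_poor += 1
        else:
            other_good += 1
    # Add 0.5 to every cell so an empty cell does not cause a division by zero.
    return ((carrier_poor + 0.5) * (other_good + 0.5)) / (
        (carrier_good + 0.5) * (other_poor + 0.5)
    )

# Hypothetical records: (set of markers carried, whether the outcome was poor).
records = (
    [({"marker_1"}, True)] * 6 + [({"marker_1"}, False)] * 2
    + [({"marker_2"}, True)] * 2 + [({"marker_2"}, False)] * 2
    + [(set(), True)] * 2 + [(set(), False)] * 6
)

ranking = sorted(
    ((odds_ratio(records, m), m) for m in ("marker_1", "marker_2")),
    reverse=True,
)
for ratio, marker in ranking:
    print(f"{marker}: odds ratio {ratio:.2f}")
```

An odds ratio near 1 means the marker tells us little about the outcome, while a larger value suggests an association. Real genomic studies test thousands of markers at once and need corrections for multiple comparisons, and an association on its own does not establish a cause.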

Predictive Modeling

- Disease Progression: By integrating genetic markers with other patient data, machine learning models can predict the likely progression of cancer in an individual. This helps in choosing the most effective therapeutic strategies early in the treatment process.

- Treatment Response: Genetic data can help predict how a patient will respond to specific treatments. For instance, the presence of certain genetic mutations might indicate a higher likelihood of success with targeted therapy drugs, leading to personalized treatment plans that are more likely to be effective.

- Risk Assessment: Predictive models also assist in risk stratification, identifying patients who are at higher risk of developing severe forms of cancer, thereby prioritizing monitoring and preventative treatments.

Integrative Analysis

- Combining Datasets: Machine learning facilitates the integration of genetic data with clinical records, imaging results, and laboratory tests. This holistic approach allows for a more comprehensive analysis of a patient’s condition, considering all possible factors influencing their health.

- Enhanced Diagnostics: Integrating diverse datasets helps in diagnosing complex cases where symptoms are ambiguous and traditional diagnostic methods fall short. This comprehensive view leads to more accurate diagnoses and better patient management.

- Research and Development: In research settings, integrative analysis helps identify new potential therapeutic targets by linking genetic data with disease mechanisms observed in clinical and imaging data.

Impact and Advancements

Machine learning’s role in leveraging genetic data is a cornerstone of the move towards more personalized medicine. As these technologies advance, they are expected to become more integrated into routine clinical practice, leading to improvements in patient outcomes through more precise and tailored treatment strategies. Furthermore, as machine learning algorithms become better at handling the complexity and variability of genetic data, their applications in medical research and clinical practice will expand, potentially revolutionizing how diseases are understood and treated.

Known Outcomes in Machine Learning for Medical Diagnostics

In the realm of machine learning, particularly in medical applications such as cancer diagnosis, the use of “known outcomes” is essential. Known outcomes refer to the labels assigned to data points that indicate the results of previous medical evaluations, such as whether a tumor is benign or malignant. These outcomes play a crucial role in the training and effectiveness of supervised learning models. Here’s a deeper exploration of this concept:

Importance of Known Outcomes

- Training Supervised Models:

- Learning from Examples: In supervised learning, models are trained using a dataset that includes both input features (such as genetic markers, imaging data, and patient demographics) and known outcomes. The model learns by analyzing these examples to identify patterns or features that correlate with specific outcomes.

- Labeling: The labels or known outcomes in the training set guide the model in making predictions. For instance, if a dataset includes MRI images labeled as ‘benign’ or ‘malignant’, the model learns to classify new images based on the patterns it recognized in the training set.

- Model Evaluation:

- Accuracy Assessment: Known outcomes are used to evaluate the accuracy of the machine learning model. By comparing the model’s predictions with the actual outcomes, researchers can determine how well the model is performing.

- Validation and Testing: The dataset is typically divided into subsets for training, validation, and testing. Known outcomes in the validation and testing sets help ensure that the model generalizes well to new, unseen data, not just the data on which it was trained.

- Enhancing Predictive Power:

- Refinement of Models: Known outcomes allow for the refinement of machine learning models through techniques such as parameter tuning and model selection. By understanding how different configurations affect prediction accuracy, developers can optimize models for better performance.

- Feature Importance: Analysis of how different features influence the known outcomes can help in understanding which variables are most predictive of cancer, guiding further research and diagnostics.
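The role of known outcomes in splitting and evaluation can be illustrated with a minimal sketch. Everything in it is an assumption made for illustration: the toy records, the 70/15/15 split, the helper names, and the deliberately simple threshold "model" are not taken from any real clinical dataset or library.

```python
import random

def train_val_test_split(records, val_frac=0.15, test_frac=0.15, seed=0):
    """Shuffle labeled records and split them into train/validation/test subsets."""
    shuffled = records[:]
    random.Random(seed).shuffle(shuffled)
    n_test = int(len(shuffled) * test_frac)
    n_val = int(len(shuffled) * val_frac)
    test = shuffled[:n_test]
    val = shuffled[n_test:n_test + n_val]
    train = shuffled[n_test + n_val:]
    return train, val, test

def accuracy(predictions, known_outcomes):
    """Fraction of predictions that match the known outcomes."""
    correct = sum(p == y for p, y in zip(predictions, known_outcomes))
    return correct / len(known_outcomes)

# Hypothetical toy records: (tumor_size_mm, known_outcome)
records = [(size, "malignant" if size > 20 else "benign") for size in range(5, 45)]
train, val, test = train_val_test_split(records)

def mean_size(rows, label):
    """Average size of the training records that carry the given label."""
    sizes = [size for size, outcome in rows if outcome == label]
    return sum(sizes) / len(sizes)

# "Training": place a threshold halfway between the two class means.
threshold = (mean_size(train, "benign") + mean_size(train, "malignant")) / 2

def predict(size):
    return "malignant" if size > threshold else "benign"

# Evaluation: compare predictions with known outcomes the model never saw.
test_accuracy = accuracy(
    [predict(size) for size, _ in test],
    [outcome for _, outcome in test],
)
print(f"train={len(train)} val={len(val)} test={len(test)} accuracy={test_accuracy:.2f}")
```

The validation subset is left unused here; in practice it would guide choices such as the threshold rule itself, so that the test subset stays untouched until the final assessment.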

Challenges with Known Outcomes

- Data Quality and Consistency:

- Label Accuracy: The reliability of machine learning predictions is heavily dependent on the accuracy of the known outcomes used during training. Incorrect labels can mislead the model, resulting in poor performance.

- Consistent Standards: Variability in diagnostic criteria and techniques across different medical facilities can lead to inconsistencies in outcome labeling, complicating the training process.

- Ethical Considerations:

- Bias and Fairness: Models trained on datasets with biased known outcomes may replicate or amplify these biases. It’s essential to ensure that the training data represents a diverse patient population to avoid biased predictive models.

Known outcomes are fundamental in the training of supervised machine learning models in medicine. They enable models to learn from past cases, improving their ability to make accurate predictions about new cases. Ensuring the quality and integrity of these outcomes is critical for developing robust, reliable, and fair diagnostic tools. As machine learning continues to evolve, the use of known outcomes will remain a key component in advancing medical diagnostics, offering the potential to save lives through earlier and more accurate detection of diseases like cancer.

Input Process in Machine Learning Model Training

The input process is a critical phase in the training of machine learning models, especially in complex fields like medical diagnostics. This step involves feeding the prepared and curated data into the machine learning algorithm to begin the learning process. Here’s a detailed explanation of how the input process is managed and its significance:

Managing the Input Process

- Batch Processing:

- Definition: Batch processing involves dividing the dataset into smaller, manageable groups or “batches” of data that the machine learning model processes sequentially.

- Purpose: This technique is particularly useful for handling large datasets that cannot be loaded into memory all at once. By processing data in batches, the model uses computational resources more efficiently, allowing for the training of more complex models on larger datasets.

- Sequential Processing:

- Iterative Learning: As the model processes each batch, it incrementally updates its internal parameters based on the information gained from that batch. This iterative adjustment is part of the learning process where the model gradually refines its ability to predict or classify data accurately.

- Convergence: Through sequential and iterative processing of batches, the model aims to converge to a state where its predictions become stable and accurate. This process is monitored using metrics such as loss functions, which measure how far the model’s predictions are from the actual outcomes.

- Optimizing the Learning Process:

- Learning Rate: Adjusting the learning rate, which determines how much the model’s parameters change with each batch, is crucial. A learning rate that is too high can cause the model to overshoot good solutions, oscillate, or settle quickly on a suboptimal one, while a rate that is too low can slow training excessively.

- Epochs: An epoch occurs when the machine learning model has processed all batches of data once. Multiple epochs are typically necessary, allowing the model to process the entire dataset multiple times to ensure comprehensive learning.

- Handling Overfitting and Underfitting:

- Validation Split: To detect overfitting, where the model performs well on training data but poorly on unseen data, part of the dataset is often held back as a validation set. This set is not used for training but to evaluate model performance after each epoch, so that training can be stopped or adjusted when validation performance stops improving.

- Regularization Techniques: Techniques such as dropout, where randomly selected neurons are ignored during training, or L1 and L2 regularization, which add a penalty on the size of the coefficients, are used to prevent the model from becoming overly complex.
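The ideas in this list (batches, epochs, learning rate, a loss function, and an L2 penalty) can be put together in a small sketch. It trains a logistic regression model with mini-batch gradient descent on a made-up one-feature dataset. The function names, default values, and data are illustrative assumptions, not a recommended configuration.

```python
import math
import random

def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))

def log_loss(weights, bias, data):
    """Average cross-entropy between predicted probabilities and known labels."""
    eps = 1e-12
    total = 0.0
    for x, y in data:
        p = sigmoid(sum(w * xi for w, xi in zip(weights, x)) + bias)
        total += -(y * math.log(p + eps) + (1 - y) * math.log(1 - p + eps))
    return total / len(data)

def train_minibatch(data, n_features, learning_rate=0.1, batch_size=4,
                    epochs=50, l2=0.01, seed=0):
    """Mini-batch gradient descent for logistic regression with an L2 penalty."""
    rng = random.Random(seed)
    weights = [0.0] * n_features
    bias = 0.0
    history = []  # loss after each epoch, useful for monitoring convergence
    for _ in range(epochs):
        shuffled = data[:]
        rng.shuffle(shuffled)  # reshuffle so batches differ between epochs
        for start in range(0, len(shuffled), batch_size):
            batch = shuffled[start:start + batch_size]
            grad_w = [0.0] * n_features
            grad_b = 0.0
            for x, y in batch:
                p = sigmoid(sum(w * xi for w, xi in zip(weights, x)) + bias)
                err = p - y
                for j in range(n_features):
                    grad_w[j] += err * x[j]
                grad_b += err
            # One parameter update per batch; the L2 term penalizes large weights.
            for j in range(n_features):
                grad = grad_w[j] / len(batch) + l2 * weights[j]
                weights[j] -= learning_rate * grad
            bias -= learning_rate * grad_b / len(batch)
        history.append(log_loss(weights, bias, data))
    return weights, bias, history

# Hypothetical toy data: one scaled feature, label 1 when the feature is positive.
data = [([x / 10.0], 1 if x > 0 else 0) for x in range(-10, 11) if x != 0]
weights, bias, history = train_minibatch(data, n_features=1)
print(f"first-epoch loss={history[0]:.3f} last-epoch loss={history[-1]:.3f}")
```

Each pass of the outer loop is one epoch, and each pass of the inner loop processes one batch. The per-epoch loss history is the kind of signal that would be watched to judge convergence.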

Challenges in the Input Process

- Data Skewness and Bias: Care must be taken to ensure that the batches of data are representative of the overall dataset. Skewed or biased batches can lead the model to develop biased or inaccurate generalizations.

- Hardware Limitations: The size of the batches and the complexity of the model are often limited by the available computational resources, such as GPU memory and processing power.

The input process in machine learning is foundational to effective model training, requiring careful consideration of batch size, processing sequence, and iteration management to optimize learning. This process not only ensures efficient use of computational resources but also significantly impacts the accuracy and reliability of the model in clinical applications. By managing this process effectively, practitioners can develop robust machine learning models capable of making precise diagnoses and predictions in medical diagnostics.

Feature Selection in Machine Learning Models

Feature selection is a crucial step in the development of machine learning models, particularly in complex domains like healthcare, where datasets can include a wide range of variables from patient demographics to detailed genomic data. This process involves identifying and selecting those features that are most relevant to the predictive task at hand, thereby improving model performance, reducing overfitting, and speeding up training times. Here’s an in-depth look at the process and techniques involved in feature selection:

Importance of Feature Selection

- Enhancing Model Performance: By removing irrelevant or redundant data, feature selection helps to improve the model’s accuracy and effectiveness. This is because less noise in the data means the model can more easily detect the true underlying patterns.

- Reducing Overfitting: Fewer data features mean there’s less chance of the model fitting excessively to the noise in the training set. This enhances the model’s ability to generalize to new, unseen data.

- Increasing Computational Efficiency: With fewer variables to process, models require less computational power and time to train, which is crucial when dealing with large datasets typical in medical applications.

Feature Selection Techniques

Filter Methods in Feature Selection

Filter methods are one of the primary techniques used in feature selection for machine learning models, particularly popular in the initial stages of data preprocessing. They rely on statistical measures to evaluate the importance of various features without involving the machine learning algorithms themselves. Here’s a deeper look into how these methods work, their advantages, and limitations:

Statistical Tests for Feature Selection

- Chi-square Test:

- Application: Typically used for categorical data, the chi-square test evaluates the independence between categorical features and the target variable. It determines whether the presence (or absence) of a particular feature is statistically significantly associated with the target outcome.

- ANOVA (Analysis of Variance):

- Application: ANOVA is used to determine whether there are statistically significant differences between the means of two or more independent groups. In feature selection, it helps identify numerical features whose means differ across the categories of the target variable.

- Correlation Coefficients:

- Application: This involves assessing the strength and direction of the relationship between numerical features and the target variable. Pearson correlation measures linear relationships in continuous data, while Spearman’s rank correlation measures monotonic relationships and is suited to ordinal data.
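As a minimal sketch of a filter method, the snippet below ranks features by the absolute Pearson correlation between each feature and the target, without training any model. The feature names and values are hypothetical, and a real pipeline would more likely rely on an established statistics library than on hand-written formulas.

```python
import math

def pearson(xs, ys):
    """Pearson correlation coefficient between two equal-length sequences."""
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    cov = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    var_x = sum((x - mean_x) ** 2 for x in xs)
    var_y = sum((y - mean_y) ** 2 for y in ys)
    if var_x == 0 or var_y == 0:
        return 0.0  # a constant column carries no information about the target
    return cov / math.sqrt(var_x * var_y)

def rank_features(columns, target):
    """Score each feature by |correlation with target|, highest first."""
    scores = {name: abs(pearson(values, target)) for name, values in columns.items()}
    return sorted(scores.items(), key=lambda item: item[1], reverse=True)

# Hypothetical toy data: six patients, target 1 = malignant, 0 = benign.
columns = {
    "tumor_size": [1, 2, 3, 4, 5, 6],
    "noise": [3, 1, 2, 3, 1, 2],
    "constant": [7, 7, 7, 7, 7, 7],
}
target = [0, 0, 0, 1, 1, 1]

ranking = rank_features(columns, target)
for name, score in ranking:
    print(f"{name}: {score:.3f}")
```

A filter step would then keep only the top-ranked features, or those above a chosen score threshold, before any model is trained.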

Advantages of Filter Methods

- Computational Efficiency: Since filter methods do not involve training a machine learning model, they are computationally less intensive and faster to execute, making them suitable for datasets with a high number of features.

- Scalability: These methods can be easily scaled to large datasets, which is beneficial in fields like bioinformatics or medical imaging where high-dimensional data is common.

- Simplicity and Versatility: Filter methods are straightforward to understand and implement. They can be used as a standalone process or combined with other feature selection techniques to enhance model performance.

Limitations of Filter Methods

- Independence from Model: While being independent from the machine learning models can be an advantage in terms of speed and simplicity, it also means that the selection of features does not take into account how they interact with the model. Consequently, some important features might be discarded if they are only effective in combination with others.

- No Interaction Consideration: Filter methods do not account for interactions between features. This can lead to the retention of redundant features or exclusion of features that would have been selected based on their combined effect with others.

- Pre-Processing Step: Being a pre-processing step, filter methods are detached from the modeling process, which might lead to a mismatch between the selected features and those that would be optimal for the specific algorithms used later in the modeling.

Despite their limitations, filter methods remain a valuable tool in the arsenal of data scientists, especially during the exploratory data analysis phase where understanding and reducing the feature space is critical. Their ability to quickly reduce the dimensionality of a dataset while preserving relevant information makes them an indispensable first step in many predictive modeling tasks, particularly in scenarios where computational resources or time are limited.

Wrapper Methods in Feature Selection

Wrapper methods are a sophisticated approach to feature selection in machine learning. They use a predictive model to evaluate the effectiveness of subsets of features and select the best-performing combination based on specific performance criteria. Here’s a detailed exploration of how wrapper methods operate, including their techniques, advantages, and limitations:

Techniques in Wrapper Methods

- Recursive Feature Elimination (RFE):

- Process: RFE involves training a model and then iteratively removing the least significant features (usually based on model weights or coefficients), retraining the model after each removal. This process continues until the desired number of features is reached or when model performance no longer improves.

- Forward Selection:

- Process: This technique starts with an empty model and adds features one by one. At each step, the feature that provides the most significant improvement to the model performance is retained. The process continues until no further improvements can be made.

- Backward Elimination:

- Process: Opposite to forward selection, backward elimination starts with all the features and systematically removes the least significant feature at each step, based on certain performance criteria. The process stops when removing more features worsens the performance.
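Forward selection, as described above, can be sketched in a few lines. The scoring model here is a leave-one-out nearest-centroid classifier, chosen only because it is short to write; the data, the function names, and the choice of classifier are all illustrative assumptions, and any model with a performance score could take its place.

```python
def nearest_centroid_accuracy(rows, labels, feature_idx):
    """Leave-one-out accuracy of a nearest-centroid classifier on the chosen features."""
    correct = 0
    for i in range(len(rows)):
        sums, counts = {}, {}
        # Build class centroids from every row except the held-out row i.
        for k, (row, label) in enumerate(zip(rows, labels)):
            if k == i:
                continue
            vec = [row[j] for j in feature_idx]
            if label not in sums:
                sums[label] = [0.0] * len(vec)
                counts[label] = 0
            sums[label] = [s + v for s, v in zip(sums[label], vec)]
            counts[label] += 1
        held_out = [rows[i][j] for j in feature_idx]

        def squared_distance(label):
            centroid = [s / counts[label] for s in sums[label]]
            return sum((a - b) ** 2 for a, b in zip(held_out, centroid))

        predicted = min(sums, key=squared_distance)
        correct += predicted == labels[i]
    return correct / len(rows)

def forward_selection(rows, labels, n_features, score_fn):
    """Greedily add the feature that most improves the score; stop when none does."""
    selected, best_score = [], 0.0
    remaining = list(range(n_features))
    while remaining:
        candidates = [(score_fn(rows, labels, selected + [j]), j) for j in remaining]
        score, feature = max(candidates, key=lambda c: c[0])
        if score <= best_score:
            break  # no candidate improves on the current subset
        selected.append(feature)
        remaining.remove(feature)
        best_score = score
    return selected, best_score

# Hypothetical toy data: feature 0 separates the classes, features 1 and 2 do not.
rows = [
    [1.0, 5.0, 0.3], [1.2, 1.0, 0.9], [0.8, 3.0, 0.1], [1.1, 4.0, 0.7],
    [3.0, 5.0, 0.2], [3.2, 1.0, 0.8], [2.9, 3.0, 0.4], [3.1, 4.0, 0.6],
]
labels = [0, 0, 0, 0, 1, 1, 1, 1]

selected, best_score = forward_selection(rows, labels, 3, nearest_centroid_accuracy)
print(selected, best_score)
```

Note that every candidate subset requires the model to be re-evaluated, which is where the computational cost of wrapper methods comes from.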

Advantages of Wrapper Methods

- Performance Optimization: Since wrapper methods involve the use of a specific machine learning model to assess feature subsets, they often yield higher performance than filter methods because they account for how features interact within the context of the model.

- Feature Interaction: These methods can identify not just individual feature contributions but also interactions between features that might be critical for predictive accuracy.

- Customizable to Model: Wrapper methods can be tailored to the specifics of the machine learning model used, making them very effective in optimizing model-specific performance.

Limitations of Wrapper Methods

- Computational Intensity: Wrapper methods can be computationally expensive because they require multiple models to be trained for different combinations of features. This can be particularly taxing when dealing with large datasets or complex models.

- Risk of Overfitting: There is a potential risk of overfitting to the training data, especially if the feature selection process leads to a model that is excessively tuned to the specifics of the training set.

- Scalability Issues: Due to their computational demands, wrapper methods can be challenging to scale to very large datasets or feature sets without significant computational resources or time.

Wrapper methods provide a powerful approach to feature selection, especially when model performance is of paramount importance. Their ability to integrate the selection process with model training helps in precisely identifying the most relevant features for the model. However, their computational cost and potential for overfitting are important considerations. In practice, they are often used when the dataset size is manageable, or when computational resources are sufficient to support the intensive calculations required.

Embedded Methods in Feature Selection

Embedded methods integrate feature selection directly into model training, using the inherent properties of specific machine learning algorithms. The training objective itself penalizes less important features, so selection happens as the model is fit. One prominent example is the Least Absolute Shrinkage and Selection Operator (LASSO), which adds a penalty proportional to the absolute value of the coefficients, forcing some of them to shrink exactly to zero. This direct integration allows for efficient feature selection that evaluates each feature in the context of the others in the model.

How Embedded Methods Work

LASSO (Least Absolute Shrinkage and Selection Operator)

- Mechanism: LASSO introduces a penalty term to the regression model proportional to the absolute value of the coefficients. This penalty encourages the reduction of certain coefficients to zero, effectively selecting a simpler, more interpretable model.

- Usage: Commonly applied in scenarios with many predictors, LASSO helps in selecting a subset of features that provides the best prediction while maintaining model simplicity.
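To make the shrink-to-zero behaviour concrete, here is a minimal sketch of LASSO fitted by coordinate descent. It assumes centered features, no intercept, and the objective (1/(2n))·Σ(y − Xβ)² + λ·Σ|β|. The data and the values of λ are invented for illustration, and production code would normally use a tested library implementation.

```python
def soft_threshold(rho, lam):
    """Shrink rho toward zero by lam; values within [-lam, lam] become exactly 0."""
    if rho > lam:
        return rho - lam
    if rho < -lam:
        return rho + lam
    return 0.0

def lasso_coordinate_descent(X, y, lam, n_iters=100):
    """Minimize (1/(2n)) * sum((y - X @ beta)^2) + lam * sum(|beta|), one coefficient at a time."""
    n, p = len(X), len(X[0])
    beta = [0.0] * p
    for _ in range(n_iters):
        for j in range(p):
            rho = 0.0  # correlation of feature j with the residual that ignores feature j
            z = 0.0    # mean squared value of feature j
            for i in range(n):
                partial_pred = sum(X[i][k] * beta[k] for k in range(p) if k != j)
                rho += X[i][j] * (y[i] - partial_pred)
                z += X[i][j] ** 2
            rho /= n
            z /= n
            beta[j] = soft_threshold(rho, lam) / z if z > 0 else 0.0
    return beta

# Hypothetical toy data: y depends only on the first (centered) feature.
X = [[-1.5, 0.5], [-0.5, -0.5], [0.5, -0.5], [1.5, 0.5]]
y = [-3.0, -1.0, 1.0, 3.0]

beta_small = lasso_coordinate_descent(X, y, lam=0.1)
beta_large = lasso_coordinate_descent(X, y, lam=3.0)
print("lam=0.1:", beta_small)
print("lam=3.0:", beta_large)
```

With the smaller penalty the irrelevant second coefficient is set exactly to zero while the first is only slightly shrunk; with the larger penalty both coefficients are zeroed out, which illustrates why the regularization parameter needs careful tuning.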

Advantages of Embedded Methods

- Efficiency: These methods are computationally more efficient than wrapper methods as they do not require training multiple separate models.

- Feature Interactions: Embedded methods consider how features interact within the model, potentially leading to more accurate and relevant feature selection.

- Automation: The process is automated within the training algorithm, simplifying the overall modeling process and reducing the need for manual feature selection.

Limitations of Embedded Methods

- Dependency on Model: The effectiveness of these methods is closely tied to the specific learning algorithm used, limiting flexibility.

- Sensitivity to Parameters: The performance can vary significantly based on the settings of regularization parameters, requiring careful tuning.

- Potential for Bias: The selected features may be biased towards those that are most effective for the specific model used, not necessarily those most predictive in general.

Tree-Based Methods as Embedded Feature Selection

Decision Trees and Ensembles

- Decision Trees: Feature selection occurs naturally as the tree is built, with the algorithm choosing the best features to split at each node.

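- Random Forests: Build many decision trees, each on a bootstrap sample of the data with a random subset of features considered at each split, and then average the trees’ predictions (or take a majority vote for classification). Feature importance is commonly derived from how much each feature’s splits reduce impurity across the trees.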

- Gradient Boosting Machines (GBMs): Builds trees sequentially, each one focusing on correcting errors from the previous trees. Features chosen to split in each tree contribute to minimizing the overall loss.
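The way a tree "chooses the best feature to split" can be sketched with Gini impurity, one common splitting criterion. The snippet below finds the single best split for a tiny, made-up dataset; the data and function names are assumptions for illustration, and real tree libraries apply the same idea recursively with many refinements.

```python
def gini(labels):
    """Gini impurity: 0 for a pure group, higher for more mixed groups."""
    n = len(labels)
    if n == 0:
        return 0.0
    return 1.0 - sum((labels.count(c) / n) ** 2 for c in set(labels))

def best_split(rows, labels):
    """Return (feature_index, threshold, impurity_decrease) of the best single split."""
    parent = gini(labels)
    best = (None, None, 0.0)
    n = len(rows)
    for j in range(len(rows[0])):
        values = sorted(set(row[j] for row in rows))
        # Candidate thresholds lie halfway between consecutive distinct values.
        for low, high in zip(values, values[1:]):
            threshold = (low + high) / 2
            left = [y for row, y in zip(rows, labels) if row[j] <= threshold]
            right = [y for row, y in zip(rows, labels) if row[j] > threshold]
            weighted = (len(left) * gini(left) + len(right) * gini(right)) / n
            decrease = parent - weighted
            if decrease > best[2]:
                best = (j, threshold, decrease)
    return best

# Hypothetical toy data: feature 0 separates the classes, feature 1 does not.
rows = [[1.0, 5.0], [1.2, 1.0], [0.8, 3.0], [3.0, 5.0], [3.2, 1.0], [2.9, 3.0]]
labels = ["benign"] * 3 + ["malignant"] * 3

feature, threshold, decrease = best_split(rows, labels)
print(f"split on feature {feature} at {threshold:.2f}, impurity decrease {decrease:.2f}")
```

Summing such impurity decreases per feature over all splits in all trees is one common way ensemble methods arrive at feature importance scores.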

Advantages of Tree-Based Methods

- Integrated Feature Selection: These methods naturally eliminate irrelevant features by selecting only the most useful ones for making splits.

- Handling of Non-linear Relationships: Effective in modeling complex, non-linear relationships that might be overlooked by linear models.

- Model Interpretability: A single tree or a small ensemble can be inspected directly, so the rationale behind predictions is clearer; large ensembles are harder to interpret.

Limitations of Tree-Based Methods

- Overfitting: Particularly with deep single trees, there is a risk of fitting too closely to the training data.

- Computational Intensity: Training ensembles like Random Forests and GBMs requires significant computational resources.

- Randomness: The random selection of features can introduce variability in feature importance across different model runs.

Embedded methods, including LASSO and tree-based algorithms, offer a streamlined approach to feature selection that is inherently part of the model training process. This integration helps in enhancing the efficiency and effectiveness of the model, although the choice of algorithm and its parameters plays a critical role in the success of these methods.

Pattern Recognition in Machine Learning

Pattern recognition is the core mechanism by which machine learning models operate, especially in applications such as medical diagnostics. This process involves the model learning to identify and utilize patterns within the data to make predictions or decisions. Here’s how it specifically applies to identifying tumors in medical imaging or genetic data:

- Identification of Patterns: As the model processes the input data (such as imaging or genetic information), it starts to recognize specific patterns and relationships. For example, it might discover that certain textures or shapes in an image are commonly associated with malignant tumors or that specific genetic markers are frequently present in cancerous cells but absent in benign conditions.

- Use of Mathematical and Statistical Methods: The model’s ability to recognize these patterns is powered by a variety of mathematical and statistical methods, each suited to different types of data and diagnostic requirements:

- Regression Analysis: This method can identify relationships between variables and outcomes. For instance, logistic regression might be used to determine the likelihood of a tumor being malignant based on certain observable features.

- Decision Trees: These are used to make sequential decisions that split the data into branches, leading to a classification. Decision trees can easily handle varied data types and can be visualized, making them particularly useful in clinical settings where explanations are necessary.

- Neural Networks: Particularly deep learning models, which are excellent at processing complex patterns in large volumes of data, such as detailed imaging data. Neural networks can learn to recognize subtle distinctions in tumor images that might not be evident to the human eye.

- Learning from Examples: During training, the machine learning model adjusts its parameters as it processes each batch of examples. This adjustment is based on the accuracy of its predictions against known outcomes. For example, if a model incorrectly predicts a benign tumor as malignant, it learns from this error, adjusting its internal parameters to improve accuracy for similar cases in the future.

- Feature Importance: Through pattern recognition, the model also learns which features (e.g., specific imaging characteristics or genetic markers) are most important in predicting the outcome. This insight is crucial for clinicians to understand which factors are most indicative of malignancy.

Benefits of Pattern Recognition

- Enhanced Diagnostic Accuracy: By accurately identifying which characteristics of tumors are associated with malignancy, machine learning models can improve the precision of diagnoses.

- Personalized Medicine: Pattern recognition allows for more personalized healthcare, as models can predict how individual patients’ tumors might behave and respond to treatments based on historical data.

- Efficiency in Healthcare: Automating part of the diagnostic process with machine learning can lead to faster diagnosis and treatment, potentially saving lives by catching diseases earlier.

Iterative Improvement in Machine Learning

The process of training a machine learning model is inherently iterative. This means that the model doesn’t just pass through the training data once and complete its learning; instead, it repeatedly cycles through the data, refining and adjusting its internal parameters with each iteration. This method is crucial for developing a robust model that can accurately predict or diagnose based on the data it receives. Here’s how iterative improvement works in practice:

- Initial Learning: The model starts with an initial set of parameters, often randomly assigned. It makes predictions based on these parameters and then checks these predictions against the actual outcomes.

- Error Calculation: After making predictions, the model calculates the error, which is the difference between its predictions and the actual outcomes. This error measurement guides the model in adjusting its parameters.

- Parameter Adjustment: Using algorithms such as gradient descent, the model adjusts its parameters to minimize the error. For example, if a particular feature weight leads to higher errors in tumor classification, the model will adjust this weight to decrease the error in subsequent iterations.

- Repetition of Cycles: The model continues to pass through the training data multiple times. Each pass is called an epoch, and with each epoch, the model refines its parameters further, learning more accurately which features are most indicative of benign or malignant tumors.

- Convergence: Over time, the adjustments lead to smaller and smaller changes in the error rate, eventually stabilizing when the model reaches convergence. At this point, further training yields little improvement given the algorithm and features used, although the solution found may be a local rather than a global optimum.

- Validation and Testing: Even after the model has converged, it’s essential to test it on separate validation and testing datasets to ensure that it hasn’t just memorized the training data but can generalize well to new, unseen data.
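The cycle of prediction, error calculation, parameter adjustment, and convergence can be sketched with the simplest possible model, a single weight fitted by gradient descent. The data, learning rate, and tolerance below are illustrative assumptions; real models have many parameters, but the loop has the same shape.

```python
def fit_until_converged(xs, ys, learning_rate=0.05, tolerance=1e-9, max_epochs=10_000):
    """Fit y ≈ w * x by gradient descent, stopping when the error stops changing."""
    w = 0.0  # initial parameter (a fixed start here; often random in practice)
    previous_error = float("inf")
    for epoch in range(1, max_epochs + 1):
        # Initial learning / prediction step
        predictions = [w * x for x in xs]
        # Error calculation: mean squared difference from the known outcomes
        error = sum((p - y) ** 2 for p, y in zip(predictions, ys)) / len(xs)
        # Convergence check: stop once the error changes by less than the tolerance
        if abs(previous_error - error) < tolerance:
            return w, error, epoch
        # Parameter adjustment: step against the gradient of the error
        gradient = sum(2 * (p - y) * x for p, y, x in zip(predictions, ys, xs)) / len(xs)
        w -= learning_rate * gradient
        previous_error = error
    return w, error, max_epochs

# Hypothetical toy data roughly following y = 2x.
xs = [1.0, 2.0, 3.0, 4.0]
ys = [2.1, 3.9, 6.2, 7.8]

w, error, epochs_used = fit_until_converged(xs, ys)
print(f"w={w:.4f} error={error:.5f} epochs={epochs_used}")
```

The loop stops well before the epoch limit on this data because successive errors quickly differ by less than the tolerance. A separate validation set would still be needed to check that the fitted weight generalizes.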

Benefits of Iterative Improvement

- Enhanced Accuracy: By continually refining its approach, the model becomes more accurate in its predictions. This is crucial in medical settings where diagnostic accuracy can significantly impact patient outcomes.

- Adaptability: Iterative improvement allows the model to adapt to the complexity of medical data, learning subtle patterns that might be missed in a single pass through the data.

- Optimized Performance: Through iterative learning, the model can optimize its performance, balancing between underfitting and overfitting, which helps it perform well in real-world scenarios.

Challenges

- Computational Cost: Iterative processes require significant computational resources, especially with large datasets or complex models like deep neural networks.

- Overfitting Risk: If not monitored carefully, the model might adapt too specifically to the training data, capturing noise rather than the underlying patterns, which impairs its performance on new data.

Iterative improvement is a cornerstone of machine learning training, allowing models to progressively refine their ability to make predictions or classifications, such as distinguishing between benign and malignant tumors. This method ensures that the model not only learns effectively but also adapts to the nuances of the specific medical application it is designed for.